AI coding tools promise faster development, but new research shows they often produce code with serious security holes. That raises the risk for the companies that ship it.

Security firms including Intruder and Tenzai shared details of their findings in January 2026. Developers need to review AI-generated code carefully.

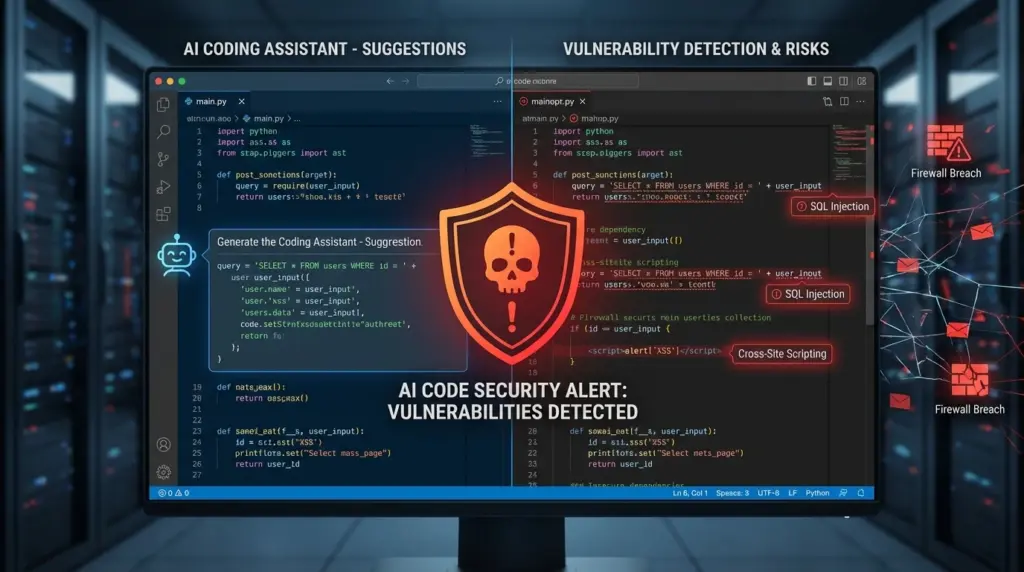

How AI Coding Tools Miss Flaws

Intruder tested AI-generated code in a honeypot tool. The AI added a vulnerability that could be attacked through IP headers, and static analysis tools such as Semgrep and Gosec did not flag it.

Tenzai evaluated five AI coding agents across 15 applications and found 69 flaws.
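To illustrate the general class of flaw, here is a minimal sketch of how trusting a client-supplied IP header can go wrong. It is not Intruder's code. The function names, the `X-Forwarded-For` header choice, and the `TRUSTED_PROXIES` set are assumptions made for this example.

```python
import ipaddress

# Hypothetical set of reverse proxies we operate ourselves (assumption).
TRUSTED_PROXIES = {"10.0.0.5"}


def client_ip_naive(headers: dict, peer_ip: str) -> str:
    """Insecure pattern: believe whatever the client put in the header."""
    return headers.get("X-Forwarded-For", peer_ip).split(",")[0].strip()


def client_ip_checked(headers: dict, peer_ip: str) -> str:
    """Use the header only if the direct peer is a trusted proxy, then validate it."""
    if peer_ip not in TRUSTED_PROXIES:
        # The request did not come through our proxy, so the header is untrusted.
        return peer_ip
    forwarded = headers.get("X-Forwarded-For", "")
    # Take the right-most entry: the one appended by our own proxy.
    candidate = forwarded.split(",")[-1].strip()
    try:
        # Reject anything that is not a syntactically valid IP address.
        return str(ipaddress.ip_address(candidate))
    except ValueError:
        return peer_ip
```

The naive version returns attacker-controlled text that may later reach logs, file paths, or queries. The checked version falls back to the socket peer address whenever the header cannot be trusted or parsed.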

- Claude Code, OpenAI Codex, Cursor, Replit, and Devin all produced broken API logic

- Business logic errors hit e-commerce systems especially hard

- AI lacks “common sense” and depends on explicit instructions

- Veracode study: 45% of AI-generated code contains OWASP Top 10 vulnerabilities

- Models choose insecure methods half the time

AI generates code at machine speed, but it also introduces defects that a human developer might have avoided.
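A business logic error in an e-commerce system often comes down to a rule nobody stated explicitly, such as "a quantity cannot be negative" or "the price comes from the server, not the request". The sketch below shows one way to enforce such rules. The `CATALOG`, `MAX_QTY`, `LineItem`, and `order_total` names are hypothetical and are not taken from the Tenzai report.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical server-side catalogue: prices are never read from the request.
CATALOG = {"sku-1": Decimal("19.99"), "sku-2": Decimal("5.00")}
MAX_QTY = 100  # assumed per-line limit


@dataclass
class LineItem:
    sku: str
    quantity: int


def order_total(items: list[LineItem]) -> Decimal:
    """Compute the order total while enforcing the implicit business rules."""
    total = Decimal("0")
    for item in items:
        if item.sku not in CATALOG:
            raise ValueError(f"unknown SKU: {item.sku}")
        # A negative or zero quantity would otherwise reduce the total.
        if not isinstance(item.quantity, int) or not 1 <= item.quantity <= MAX_QTY:
            raise ValueError(f"invalid quantity for {item.sku}: {item.quantity}")
        total += CATALOG[item.sku] * item.quantity
    return total
```

Without the quantity check, an order containing `LineItem("sku-1", -3)` would silently lower the amount charged, which is the sort of defect a human reviewer would be expected to catch.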


Risks and Ways to Protect

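An IBM report found that 13% of firms have suffered AI-related breaches, and 97% of those lacked proper controls. “Shadow AI”, meaning AI tools used without approval, causes many of the issues. Separately, North Korean hackers are targeting macOS developers with malicious projects.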

- 20% of organizations have seen breaches caused by unapproved AI

- Vibe-coding tools are adopted without risk checks

- Unit 42: monitor the inputs and outputs of AI tools

- Checkmarx: traditional debugging fails at AI scale

- 2026 prediction: more vulnerabilities from AI-generated code

Experts recommend using behavioral detection, adding human review, and training teams on AI risks. Companies need to act now.
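One simple way to combine output monitoring with human review is to scan generated code for risky patterns and send any hits to a reviewer. The following is a rough sketch under that assumption, not a method described by the firms quoted here. The rule set and the `flag_for_review` function are hypothetical and deliberately small.

```python
import re

# Hypothetical, deliberately small rule set (assumption for this sketch).
RISKY_PATTERNS = {
    "dynamic code execution": re.compile(r"\b(eval|exec)\s*\("),
    "shell injection risk": re.compile(r"shell\s*=\s*True"),
    "TLS verification disabled": re.compile(r"verify\s*=\s*False"),
    "weak hash": re.compile(r"hashlib\.(md5|sha1)\s*\("),
}


def flag_for_review(generated_code: str) -> list[tuple[int, str]]:
    """Return (line number, label) pairs that a human should look at."""
    findings = []
    for lineno, line in enumerate(generated_code.splitlines(), start=1):
        for label, pattern in RISKY_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings
```

A filter like this only decides which changes get extra attention. Pattern matching misses context-dependent flaws, so it supplements human review and does not replace it.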
